The Review Bomb Fallacy

Lack of quality, suspect or thin motivation, and more make this community tool fizzle more often than not when it comes to eliciting change.

Normally, as community managers, we’re all about the numbers, especially when it comes to games, and most certainly for games that offer a persistent service beyond the initial purchase, such as an online component. Metrics such as CCU (concurrent users), number of copies purchased, reach, engagement, and more are some of a CM’s best tools, because they typically provide hard data to back up that all-important measurement of “sentiment”. For those who don’t know, sentiment is the general sense of community opinion, often formed from a CM’s perception of how customers view a product or service. When that perception is backed up by the numbers, a development team or company is more likely to listen to user feedback and perhaps pivot based on it.

But there is, unfortunately, one place where the numbers work against this credible assessment, and that’s in the space of user reviews. More specifically, it’s in the commonly seen practice of “review bombing”: flooding a site that compiles user reviews, such as Metacritic or Amazon, with low-scoring write-ups in order to drag down the aggregate user score.

The most recent target of this practice in the games industry was the Activision Blizzard title Diablo IV. Its first season of content was accompanied by a patch that made a ton of changes, including some balancing adjustments that weren’t received very well by the Diablo IV community. At least two classes had specific viable playstyles made less effective, and a slate of intended quality-of-life changes (such as increasing the teleport time to town) was seen as unnecessary and inconvenient. Beyond the overwhelmingly negative response in Diablo IV’s user communities, players took to Metacritic to express their anger and frustration, dragging the game’s user score down to a dismal 2.2 as of this writing. Eventually, the development team held a livestream that acknowledged the feedback, essentially promised never to release a patch like that again, and laid out some improvements that the team feels will be responsive to player feedback. And predictably, I’ve seen many comments claiming that review bombing was part of the reason for this reversal.

They couldn’t be more wrong. And here’s why.

Community managers are obligated not just to look at the numbers, but to look at the whole picture. Numbers are helpful as supporting data, as I mentioned before, and for communicating a general sense of what is trending among end users. But they aren’t the be-all and end-all of reporting proper and accurate user feedback. That comes from a combination of data and what I call quality opinions: feedback that tells us an opinion, why that opinion is held, and, depending on whether it is positive or negative, what should be done moving forward. This is especially important in games, where future patches and development cycles can be shaped in part by what players say. Players provide a unique perspective simply by virtue of not having spent years with their noses buried in the product’s development.

Review bombing cannot be consistently relied on for those quality opinions, especially when the motivations behind it vary so widely. The Last of Us Part II, for example, suffered a massive story spoiler leak that caused its user score to plummet on launch day, well before anyone could realistically have played through the hours-long campaign and formed a credible opinion. The first documented review bomb, against 2008’s Spore, consisted largely of poor reviews that stemmed more from the game’s use of DRM than from the game itself. The list goes on, and the problem is that the practice seems to be validated whenever developers end up pivoting.

Now, don’t get me wrong - I get why users sometimes resort to review bombing, especially when it seems like they aren’t being listened to. And there are plenty of legitimate reasons to submit a negative review when a recent decision or a poor user experience warrants it. But there isn’t any feasible way to control the quality or motivation of negative user reviews (for example, when they are leveraged against a product over ideological views rather than an evaluation of its content, or over small parts of the game rather than the whole). So past a certain point, a community manager can only treat a mass review bomb as a general indicator of negative sentiment, not as something that solely or even partially drives actual changes or decisions.

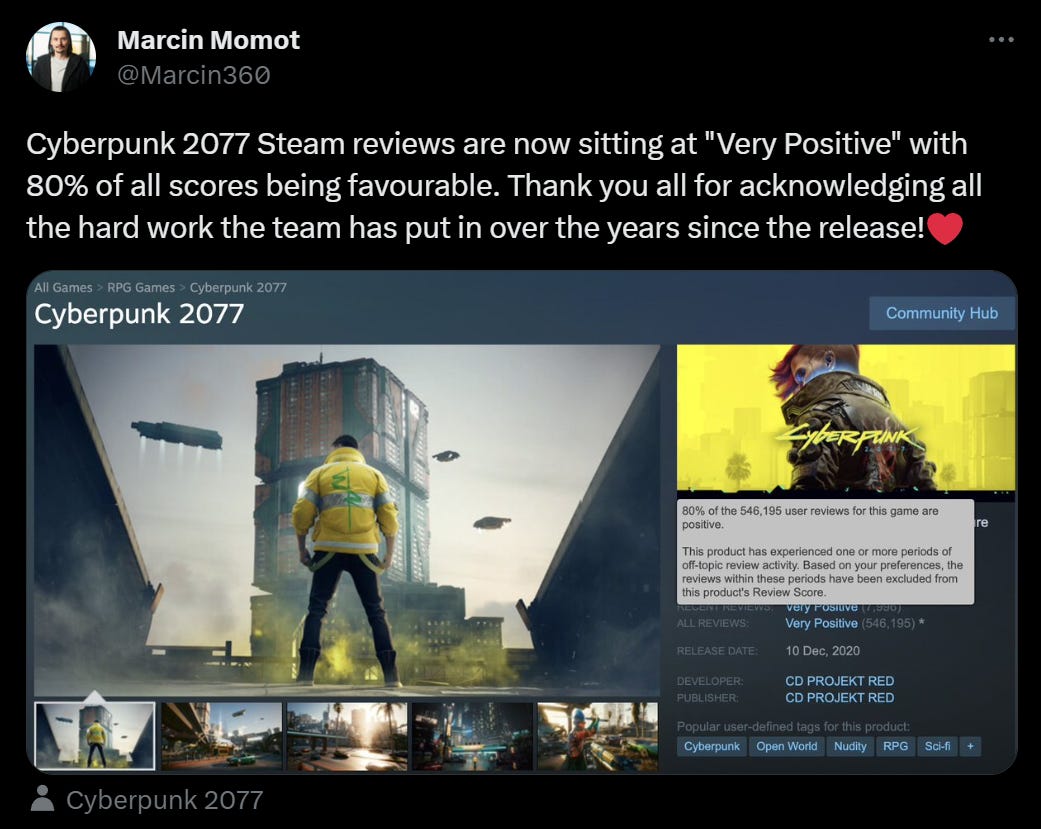

Those changes and decisions, by the way, are driven far more by the quality opinions I mentioned before. A negative review that says, in good faith, what sucks, why it sucks, and what can be done to make it suck less is exponentially more valuable for a community manager to pass along than 100 one-star reviews with single lines of frustration. As a CM, I loved that kind of stuff because it gave me meat and potatoes to take back to the development team rather than a pile of numerical scores. It’s detailed feedback like this, not review bombs, that allows games such as Cyberpunk 2077, itself a one-time target of review bombing, to work their way back into positive sentiment territory, even if it takes years. Detailed, critical feedback works. Review bombing, in many cases, does not.

As far as Diablo IV is concerned, this isn’t to say that Activision Blizzard wasn’t culpable for releasing such a poorly received patch. I’m a casual player, and even I could see that the communication around the patch, and the effort to prepare players for its changes, could have been handled much better (something that, it seems, will be addressed by future livestreams that do just that). Developers can and should take their cues from a general perception and a consistent trend of players being dissatisfied with something (or, in some cases, being highly supportive of something else). Good community managers and community management teams can support such efforts.

But in the end, the feedback has to be meaningful, even when it’s negative - and review bombs, in many ways and for many reasons, just don’t do that consistently, diluting the value of user reviews significantly. If something changes in a game, I can assure you that it won’t be primarily or even partially because of mass one-star reviews, no matter how many there are - instead, it’ll come from the explanations, details, and constructive criticism that players give, which are oftentimes valued but never given the credit they deserve.